Imagine taking a dusty portrait of your great-grandfather and transforming it into a video where he delivers a heartfelt speech at your wedding. Or animating a static company logo into a charismatic spokesperson that interacts with customers in real time. This isn’t science fiction—it’s the promise of OmniHuman-1, ByteDance’s latest AI model that’s rewriting the rules of digital content creation. But beneath its dazzling capabilities lies a darker truth: we’re entering an era where seeing is no longer believing.

The Rise of OmniHuman-1: How It Works (And Why It’s Different)

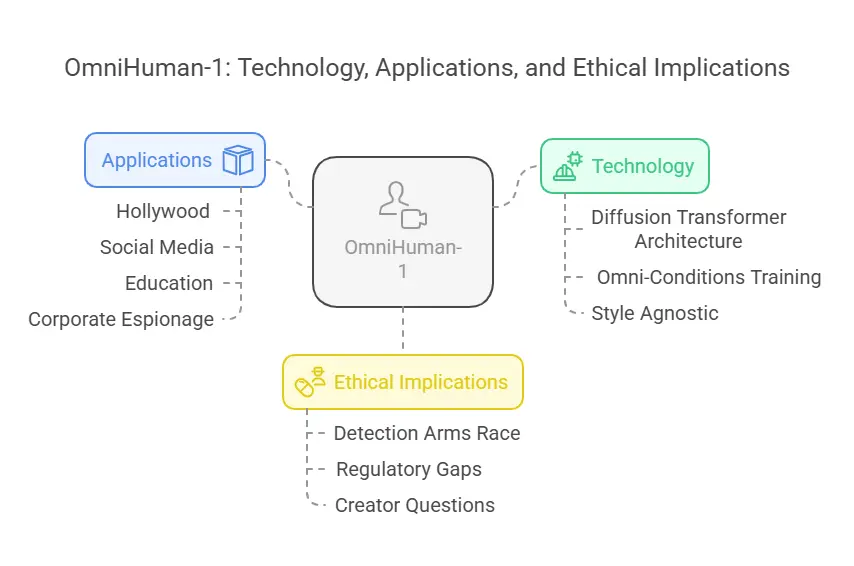

At its core, OmniHuman-1 is a multimodal video-generation framework that takes a single reference image plus motion signals (audio, video clips, or pose data) and produces hyper-realistic human animations. Unlike predecessors that focused on isolated facial movements or stiff full-body renders, this model aims to capture the nuances of natural motion, from the flutter of an eyelid to the sway of a hip during a dance (1) (8).

The Tech Behind the Magic

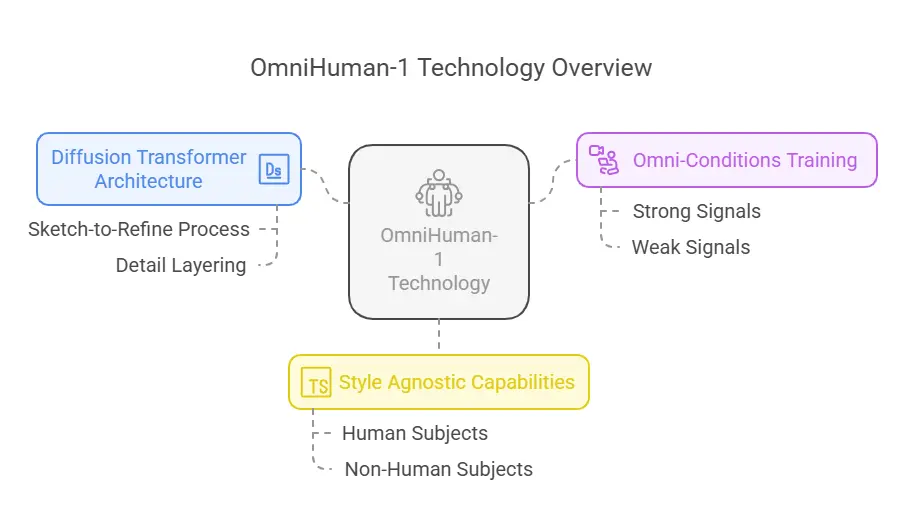

- Diffusion Transformer (DiT) Architecture: Borrowing from cutting-edge image generators, OmniHuman-1 works in a “sketch-to-refine” fashion. As in other diffusion models, generation starts from noise and is denoised over many steps, so coarse motion emerges first and details (textures, lighting, micro-expressions) are refined in later steps until the output resembles organic movement (1) (14).

- Omni-Conditions Training: The model was trained on roughly 19,000 hours of video, blending “strong” signals (precise pose tracking) with “weak” ones (audio cues). This hybrid approach helps it generalize across scenarios, whether syncing a TED Talk’s hand gestures or mimicking a pop star’s choreography (1) (10).

- Style Agnostic: Whether fed a Renaissance painting, a cartoon character, or a grainy selfie, OmniHuman-1 adapts. It even handles non-human subjects, like animating a talking cat with eerily plausible paw gestures (2) (9).
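To make the idea of blending strong and weak signals more concrete, here is a minimal sketch of one way such mixing could work during training. It assumes a scheme in which each clip randomly keeps or drops each motion signal, with the stronger signal (pose) dropped more often so it cannot dominate the weaker one (audio). The names `KEEP_PROB`, `sample_active_conditions`, and `simulate`, as well as the probabilities themselves, are invented for illustration and are not taken from ByteDance's code.

```python
import random

# Hypothetical keep-probabilities, chosen only for illustration.
# The weaker signal (audio) is kept more often than the stronger one (pose).
KEEP_PROB = {"reference_image": 1.0, "audio": 0.5, "pose": 0.25}


def sample_active_conditions(available, rng):
    """Return the subset of conditions used for one training clip."""
    active = []
    for name in available:
        # rng.random() is in [0, 1), so a probability of 1.0 always keeps.
        if rng.random() < KEEP_PROB[name]:
            active.append(name)
    return active


def simulate(num_clips, seed=0):
    """Estimate how often each condition is active across many clips."""
    rng = random.Random(seed)
    counts = {name: 0 for name in KEEP_PROB}
    for _ in range(num_clips):
        for name in sample_active_conditions(list(KEEP_PROB), rng):
            counts[name] += 1
    return {name: counts[name] / num_clips for name in counts}


if __name__ == "__main__":
    print(simulate(10_000))
```

In this toy setup the reference image is always present, while audio appears in about half of the clips and pose in about a quarter, so the model would see many examples where it has to rely on the weaker signal alone.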

Key Features That Set OmniHuman-1 Apart

| Feature | Traditional Models | OmniHuman-1 |
|---|---|---|
| Input Flexibility | Single modality (e.g., audio-only) | Audio, video, pose, or hybrid inputs |
| Output Realism | Uncanny valley artifacts | Strong reported FVD scores (Fréchet Video Distance; lower is better) |
| Body Control | Limited to face/upper torso | Full-body precision, including hands |
| Training Efficiency | Requires curated, “perfect” data | Utilizes imperfect or noisy datasets |
| Ethical Safeguards | Minimal | None disclosed |

Table 1: OmniHuman-1 vs. conventional AI video tools. Sources: [ByteDance research paper], [TechCrunch analysis].
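For readers unfamiliar with FVD, the sketch below shows the Fréchet distance that the metric is built on, in a deliberately simplified form. It assumes each video has already been turned into a small feature vector and that feature dimensions are independent (a diagonal covariance), which reduces the distance to a sum of squared differences in means and standard deviations. Real FVD uses features from a pretrained video network and full covariance matrices, so the function names and toy numbers here are illustrative only.

```python
import math


def mean_and_std(vectors):
    """Per-dimension mean and population standard deviation."""
    n = len(vectors)
    dims = len(vectors[0])
    means = [sum(v[d] for v in vectors) / n for d in range(dims)]
    stds = [
        math.sqrt(sum((v[d] - means[d]) ** 2 for v in vectors) / n)
        for d in range(dims)
    ]
    return means, stds


def frechet_distance_diagonal(real, generated):
    """Squared Frechet distance between two diagonal-covariance Gaussians.

    d^2 = sum_d (mu_r - mu_g)^2 + sum_d (sigma_r - sigma_g)^2
    """
    mu_r, sd_r = mean_and_std(real)
    mu_g, sd_g = mean_and_std(generated)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_g))
    spread_term = sum((a - b) ** 2 for a, b in zip(sd_r, sd_g))
    return mean_term + spread_term


if __name__ == "__main__":
    real = [[0.0, 0.0], [2.0, 2.0]]       # toy "real video" features
    shifted = [[1.0, 1.0], [3.0, 3.0]]    # same spread, different mean
    print(frechet_distance_diagonal(real, real))     # identical sets
    print(frechet_distance_diagonal(real, shifted))  # offset by 1 per dimension
```

A generated set whose feature statistics match the real set scores 0, and the score grows as the means or spreads drift apart, which is why lower values indicate more realistic output.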

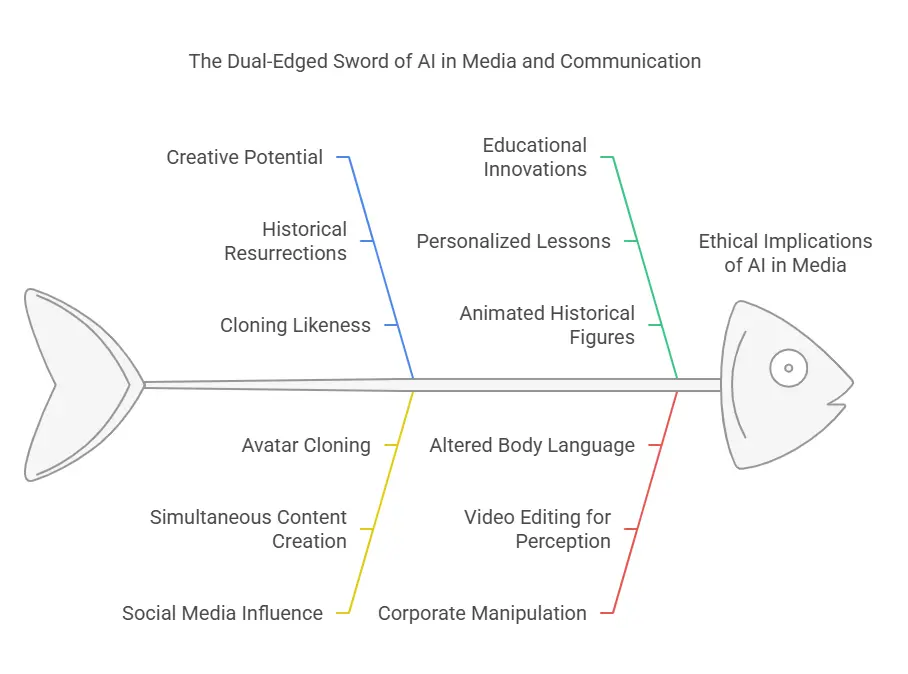

Real-World Applications: From Creativity to Chaos

- Hollywood 2.0: Directors can resurrect historical figures or de-age actors without costly motion capture. One demo even shows an AI-generated Albert Einstein explaining quantum physics—in fluent French (14).

- Social Media Gold: Influencers could clone their likeness to star in 10 TikToks simultaneously. Imagine a fitness guru hosting live workouts in five time zones—all while sleeping (8) (16).

- Education Reimagined: Teachers might deploy animated avatars of Marie Curie or MLK Jr. to deliver personalized lessons. But what happens when a deepfaked politician starts “endorsing” fringe ideologies? (3) (16)

- Corporate Spin: A leaked demo reportedly shows OmniHuman-1 editing existing videos to alter body language. Picture a CEO’s nervous fidgeting transformed into confident gestures during an earnings call (1) (10).

The Ethical Quagmire: When Innovation Outpaces Regulation

ByteDance’s demos are undeniably impressive, but they’ve ignited a firestorm. In South Korea, deepfake pornography reportedly accounts for 40% of cybercrimes, while Hong Kong recently saw a $25 million scam in which fraudsters used AI-generated likenesses of company executives (16). OmniHuman-1 needs just one photo and an audio clip, so its accessibility could democratize synthetic media, for better and worse.

The Detection Arms Race

Tech giants like Google and Meta are scrambling to deploy tools like SynthID and Video Seal, which watermark AI content. But as Northwestern’s Matt Groh warns, “We’re in a game of whack-a-mole. Each detection breakthrough is met with a more sophisticated generator” (5).
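The whack-a-mole dynamic is easier to see with a toy example. The sketch below is not how SynthID or Video Seal work; it swaps in a much simpler least-significant-bit watermark, with hypothetical function names and made-up pixel values, to show the basic embed-and-detect idea and how a naive mark disappears after a crude stand-in for lossy re-encoding. Production watermarks are designed to survive this kind of transformation.

```python
def embed(pixels, bits):
    """Write one watermark bit into the lowest bit of each pixel value."""
    return [(p & ~1) | b for p, b in zip(pixels, bits)]


def detect(pixels, bits):
    """Fraction of pixels whose lowest bit matches the expected watermark."""
    matches = sum((p & 1) == b for p, b in zip(pixels, bits))
    return matches / len(bits)


def requantize(pixels, step=4):
    """Crude stand-in for lossy re-encoding: snap values to multiples of step."""
    return [(p // step) * step for p in pixels]


if __name__ == "__main__":
    pixels = [12, 200, 37, 90, 141, 66, 255, 18]  # toy grayscale values
    bits = [1, 0, 1, 1, 0, 1, 0, 1]               # toy watermark pattern

    marked = embed(pixels, bits)
    print(detect(marked, bits))              # 1.0: every bit matches
    print(detect(requantize(marked), bits))  # 0.375: only chance-level matches remain
```

After requantization every low bit is zero, so the detector only "matches" the three positions where the watermark bit happened to be zero. That fragility is the reason detection research focuses on marks that persist through compression, cropping, and re-recording.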

The Road Ahead: Can We Trust Our Eyes?

ByteDance hasn’t announced a public release date, but leaked samples already circulate on platforms like X (formerly Twitter). One viral clip shows a TED speaker whose gestures align so perfectly with her speech that even experts struggle to spot the AI touch (8) (10).

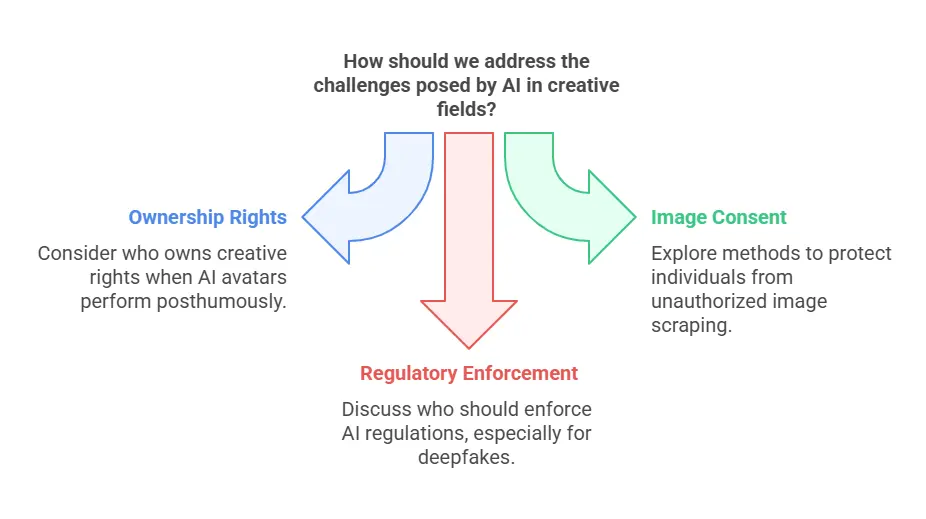

3 Questions Every Creator Should Ask

- Authenticity vs. Artistry: If a poet’s avatar performs their work posthumously, who owns the creative rights?

- Consent in the AI Age: How do we protect individuals whose images are scraped from social media to train these models?

- Regulatory Gaps: The EU’s Artificial Intelligence Act requires deepfakes to be disclosed as artificially generated rather than classifying them as “high-risk,” and enforcement remains spotty. Who polices this borderless tech? (16)

Final Thoughts: The Double-Edged Sword of Progress

OmniHuman-1 isn’t just a tool—it’s a cultural inflection point. For filmmakers and educators, it’s a canvas limited only by imagination. For disinformation campaigns, it’s a weapon of mass persuasion. As we marvel at Einstein’s digital resurrection or a brand’s shape-shifting mascot, let’s also demand transparency. After all, in a world where anyone can be reanimated, truth becomes the ultimate casualty.

What’s Next?

- For Creators: Experiment with ByteDance’s Jimeng AI platform (if you can access it).

- For Policymakers: Audit existing laws—yesterday.

- For Everyone Else: Assume that any video can be fabricated. Trust, but verify.
